What is Docker?

Docker began as an internal project started by dotCloud founder Solomon Hykes while he was in France. It builds on years of innovation in dotCloud’s cloud services technology and was open-sourced under the Apache 2.0 licence in March 2013, with the main project code maintained on GitHub. The Docker project has since joined the Linux Foundation and helped found the Open Container Initiative (OCI).

Docker has attracted a great deal of attention and discussion since it was open-sourced: its GitHub project has more than 57,000 stars and more than 10,000 forks to date. The project became so popular that, at the end of 2013, the dotCloud company renamed itself Docker. Docker was originally developed and implemented on Ubuntu 12.04; Red Hat has supported Docker since RHEL 6.5; and Google uses Docker extensively in its PaaS products.

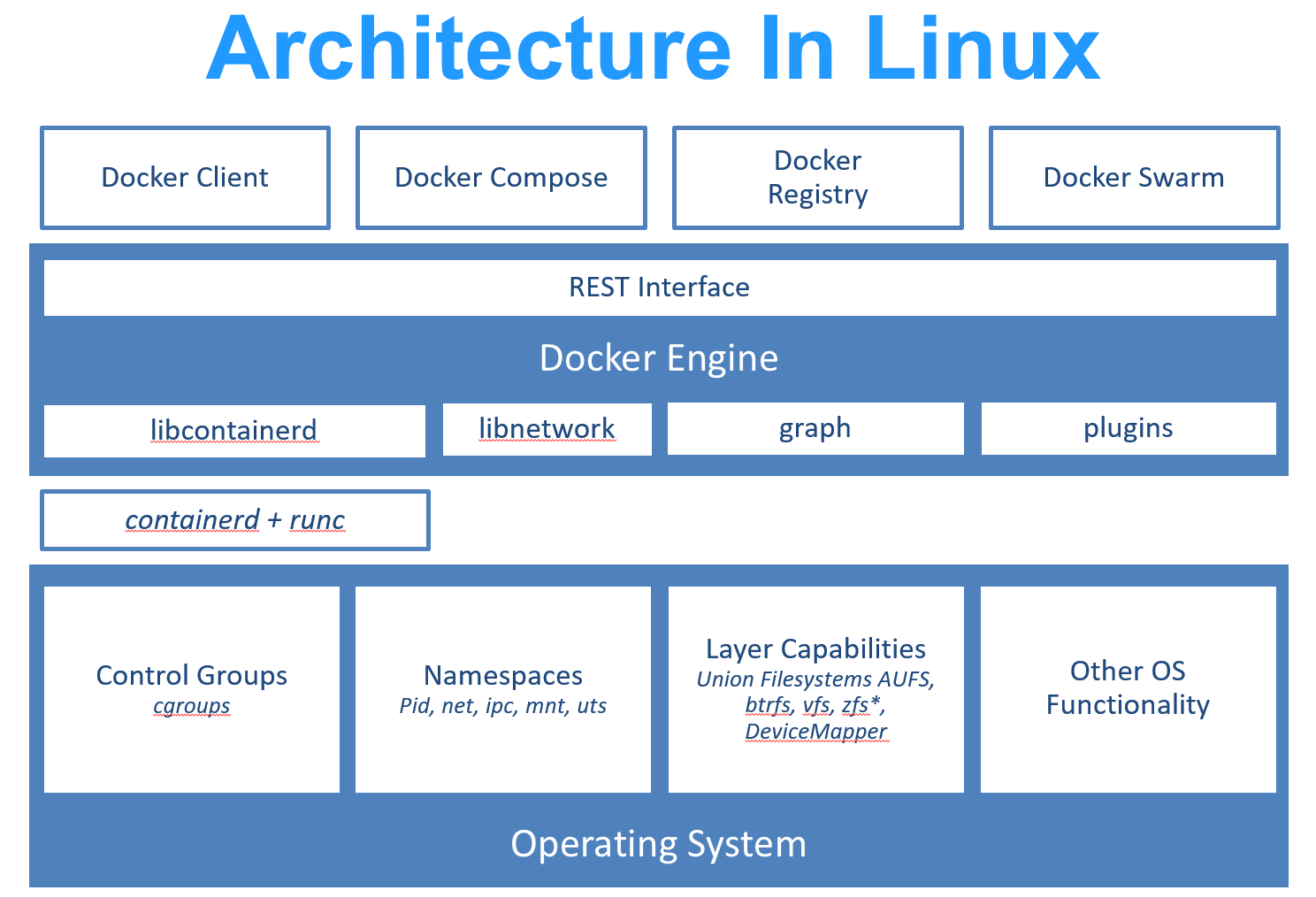

Docker is written in Go, the language developed at Google. Building on Linux kernel technologies such as cgroups, namespaces, and union file systems like OverlayFS, it encapsulates and isolates processes, which makes it a form of virtualisation at the operating system level. Because the isolated processes are independent of the host and of one another, they are also called containers. The initial implementation was based on LXC; from version 0.7 onwards LXC was phased out in favour of the self-developed libcontainer, and from version 1.11 onwards Docker has used runC and containerd.

runC is a Linux command line tool for creating and running containers according to the OCI container runtime specification.

containerd is a daemon that manages the container lifecycle, providing a minimal feature set for executing containers and managing images on a single node.
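The kernel namespaces mentioned above can be inspected from user space. The following is a minimal sketch, assuming a Linux system with `/proc` mounted; the function name `list_namespaces` is made up for this illustration and is not part of Docker or any library. It reads the namespace links that the kernel exposes for a process.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process, or {} if unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        # Not Linux, /proc not mounted, or no such process: nothing to report.
        return namespaces
    for name in entries:
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Skip entries we are not permitted to read.
            continue
    return namespaces


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:10} {ident}")
```

Two processes that print the same identifier for a given namespace share that namespace. A process inside a container will typically show different identifiers from a process on the host for the namespaces the container runtime has isolated.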

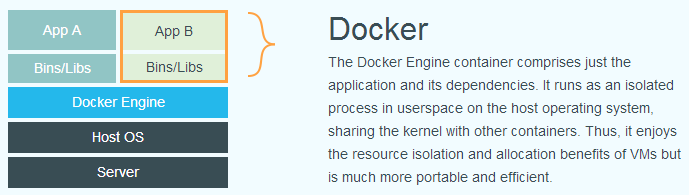

Docker adds a further layer of encapsulation on top of containers, covering everything from the file system and network interconnection to process isolation, which greatly simplifies the creation and maintenance of containers. This makes Docker technology lighter and faster than virtual machine technology.

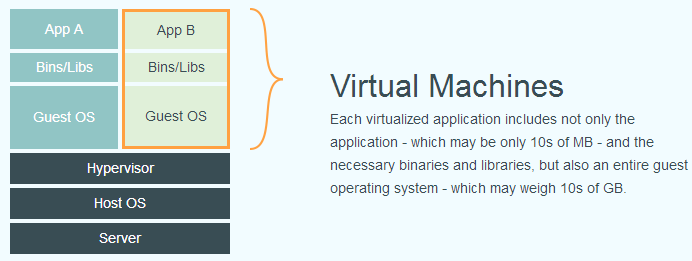

The accompanying image compares Docker with traditional virtualisation approaches. Traditional virtual machine technology virtualises a set of hardware, runs a complete operating system on it, and then runs the required application processes on that system. Containers, on the other hand, run their application processes directly on the host’s kernel: a container has no kernel of its own and does not virtualise hardware. Containers are therefore much lighter than traditional virtual machines.
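One way to observe that a container has no kernel of its own is to compare the kernel release reported on the host with the one reported inside a container. This is a small sketch using only the Python standard library; the function name `kernel_release` is chosen for this illustration.

```python
import platform


def kernel_release():
    """Return the release string of the kernel this process is running on."""
    return platform.uname().release


if __name__ == "__main__":
    print(kernel_release())
```

On a Linux host, running this script on the host and inside a container should print the same release, because both use the host kernel. Inside a virtual machine it prints the guest’s kernel instead. Where Docker itself runs inside a Linux virtual machine, as Docker Desktop does on macOS and Windows, the value to compare against is that virtual machine’s kernel.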

Why use Docker?

More efficient use of system resources

Because containers don’t require additional overheads such as hardware virtualisation and running a full operating system, Docker makes more efficient use of system resources. Whether measured by application execution speed, memory consumption, or file storage speed, it is more efficient than traditional virtual machine technology. As a result, an identically configured host can often run a greater number of applications than it could with virtual machine technology.

Faster startup times

While traditional virtual machine technology often takes minutes to start application services, Docker container applications, which run directly on the host kernel and do not require the full operating system to be started, can achieve startup times of seconds, or even milliseconds. This greatly saves time in development, testing, and deployment.

Consistent Runtime Environment

A common problem in the development process is environment consistency. Because development, testing, and production environments differ, some bugs are not found during development. A Docker image provides a complete runtime environment, except for the kernel, which keeps the application’s runtime environment consistent and helps avoid problems such as “this code is fine on my machine”.

Continuous Delivery and Deployment

For DevOps staff, the most important thing is to create or configure an application once and have it work anywhere.

With Docker, you can achieve continuous integration, continuous delivery, and deployment by customising application images. Developers can use a Dockerfile to build the image and combine it with a Continuous Integration system for integration testing, while operations staff can quickly deploy the image directly in the production environment, and even combine it with a Continuous Delivery/Deployment system for automated deployment.

Using a Dockerfile makes image building transparent: the development team can understand the environment in which the application runs, and the operations team can understand what the application needs in order to run, which helps them deploy the image in the production environment.

Easier Migration

Because Docker ensures the consistency of execution environments, it makes it easier to migrate applications. Docker can run on many platforms, whether they are physical machines, virtual machines, public clouds, private clouds, or even laptops, and the results are consistent. Therefore, users can easily migrate applications running on one platform to another without worrying about changes in the operating environment that prevent the application from running properly.

Easier Maintenance and Scaling

Docker’s use of layered storage and image technology makes it easier to reuse the common parts of an application, to maintain and update the application, and to extend a base image into further images. In addition, the Docker team, together with various open-source project teams, maintains a large number of high-quality official images. These can be used directly in the production environment or as the basis for further customisation, which greatly reduces the cost of building images for application services.
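The idea behind layered storage can be sketched with a toy model. This is a simplified illustration only, not how OverlayFS or Docker’s storage drivers are implemented; the names `WHITEOUT`, `resolve`, `base`, `app_a`, `app_b`, and `patch` are all made up for the example. Each layer maps paths to contents, and a lookup walks the stack from the topmost layer down.

```python
# Marker for a file that an upper layer has deleted.
WHITEOUT = object()


def resolve(layers, path):
    """Look up path in a stack of layers; the last list item is the top layer."""
    for layer in reversed(layers):
        if path in layer:
            content = layer[path]
            # A whiteout hides any copy of the file in lower layers.
            return None if content is WHITEOUT else content
    return None


# One shared base layer, reused by two different images.
base = {"/etc/os-release": "base OS", "/bin/sh": "shell"}
app_a = {"/app/main.py": "print('a')"}
app_b = {"/app/main.py": "print('b')"}

image_a = [base, app_a]
image_b = [base, app_b]

# An extra layer on top of image_a that patches one file and deletes another.
patch = {"/app/main.py": "print('patched')", "/bin/sh": WHITEOUT}
image_a_patched = image_a + [patch]

if __name__ == "__main__":
    print(resolve(image_a, "/app/main.py"))
    print(resolve(image_b, "/etc/os-release"))
    print(resolve(image_a_patched, "/bin/sh"))
```

Both images reuse the same `base` layer rather than copying it, and the patch layer changes what the patched image sees without modifying the layers beneath it. That is the property that makes extending a base image cheap.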
